NVIDIA certainly wasn't idle in the last two years, that much is clear. Their jump from 12nm to 8nm should set the standard for what we can expect from a node shrink combined with a generational improvement. This is the generational leap we should have seen from the 20xx series, which now seems like overpriced junk - sorry to anyone who bought one in the last 6 months and can't return it. Let's go into a bit of history and detail.

The AMD side: Shrinking 14nm to 7nm

Three years ago, in 2017, AMD RTG tried to even the playing field with the RX Vega generation, which was still built on 14nm. While extremely power hungry, it did improve performance across the board by roughly 30-75%, depending on what you looked at. In 2019 they followed up with the RX 5000 series on 7nm - except this time we saw practically no (<5%) performance increase, though they did cut down on heat generation and power draw quite a lot.

Unfortunately AMD RTG forgot to improve all the other areas: drivers are still pretty bad, video encoding is even worse, and the feature set is still lacking at the price point. Their top of the line RX 5700 XT at ~350,- € is on par with an RTX 2070 at ~350,- €, while providing no raytracing (RTX), no machine learning acceleration (Tensor), and no good video encoding and decoding support. AMD RTG bet on the competition not moving forward much, which is their signature move at this point.

The NVIDIA side: Shrinking 12nm to 8nm

And in comes NVIDIA and completely shatters that bet with their RTX 30xx launch, showing nearly double the performance across the board compared to the 20xx series, at least by NVIDIA's own numbers - without increasing the TDP by much. Everything was upgraded except for NVENC, which is already pretty damn good, so not only do you get double the performance for less money, you also get new features on top of that.

One of the new features is AV1 decoding support for up to 8K60 HDR content, which paves the way for future content production and consumption. The other is RTX IO, which moves the decompression of content such as textures from the CPU to the GPU - in the ideal case the data no longer has to take a detour through the CPU on its way from storage to GPU memory. We can only wait and see what the future has to offer, and whether there are going to be Ti or Super models once again (though I highly doubt it this time).

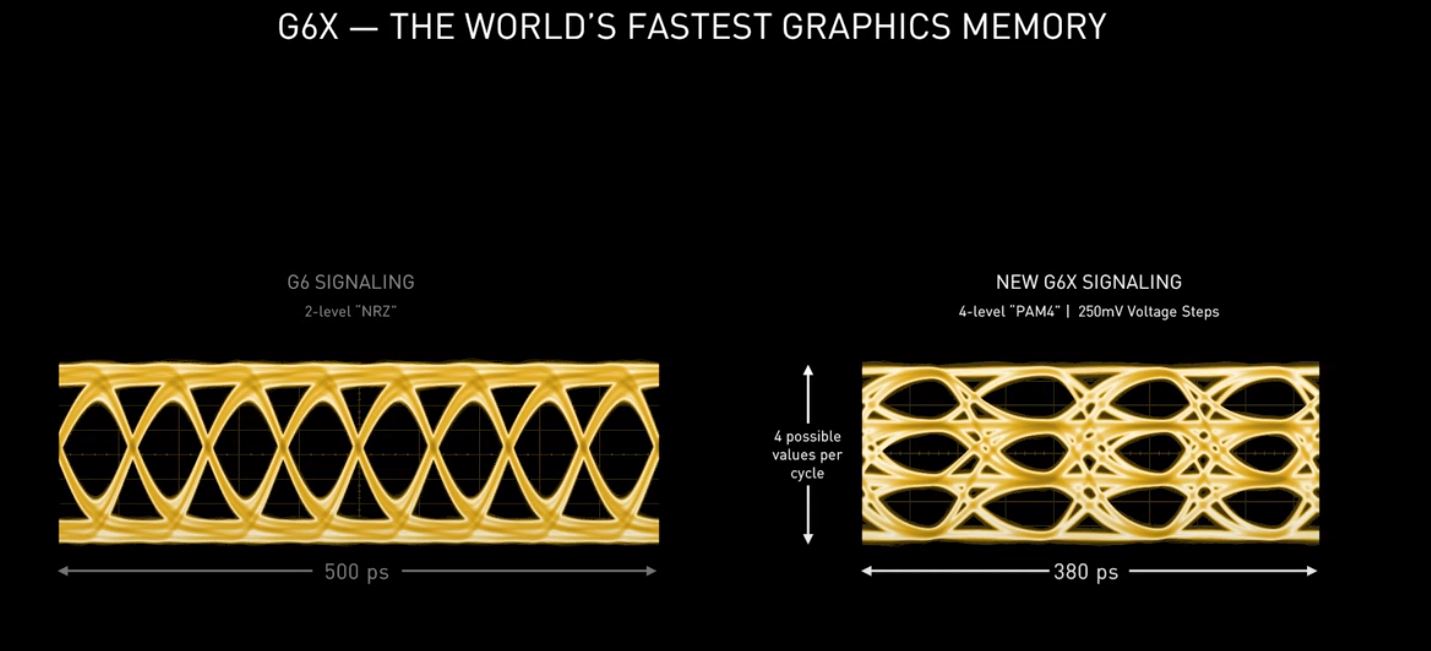

The Weird Parts: PAM4 memory signaling instead of NRZ

This slide won't mean much to the average end-user, but it is one of the most important parts of the whole launch. Basically, NVIDIA doubled the data rate of their memory interface without increasing the signaling frequency. Instead of transmitting 1 bit per cycle by switching between two voltage levels (NRZ), it now transmits 2 bits per cycle by switching between four voltage levels (PAM4).
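To make the difference a bit more tangible, here is a minimal Python sketch of how the same bit stream could be turned into symbols with NRZ and with PAM4. The function names and the Gray-coded level table are my own assumptions for illustration, not something taken from NVIDIA's slide or the actual memory specification.

```python
# Illustrative only: maps a bit stream to line symbols.
# The Gray-coded level table below is an assumption for this sketch.
PAM4_LEVELS = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}


def nrz_encode(bits):
    """NRZ: one symbol per bit, two possible levels (0 or 1)."""
    return list(bits)


def pam4_encode(bits):
    """PAM4: one symbol per pair of bits, four possible levels (0 to 3)."""
    if len(bits) % 2 != 0:
        raise ValueError("PAM4 needs an even number of bits")
    return [PAM4_LEVELS[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


bits = [1, 0, 0, 1, 1, 1, 0, 0]
nrz_symbols = nrz_encode(bits)    # 8 symbols for 8 bits
pam4_symbols = pam4_encode(bits)  # 4 symbols for the same 8 bits
print(len(nrz_symbols), len(pam4_symbols))
```

The same eight bits need eight cycles on the wire with NRZ, but only four with PAM4, which is where the doubled data rate at an unchanged frequency comes from.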

In order to do this, the 30xx series needs good enough power isolation and must be unaffected by sudden voltage drops caused by other devices or even by itself, because the four voltage levels sit much closer together than the two used before - a massive downside that increases the price and has its own limitations. It also likely means that the memory on the 30xx series will not overclock as high as on previous GPUs, since the signaling is much more sensitive to voltage differences than before.
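A rough way to see why this is so much more sensitive: if the total voltage swing stays the same, four levels have to share the room that two levels had before. The sketch below assumes evenly spaced levels and a normalized swing of 1.0, both of which are simplifications of mine rather than real GDDR6X numbers.

```python
def level_spacing(swing, levels):
    """Distance between neighbouring voltage levels, assuming even spacing."""
    if levels < 2:
        raise ValueError("need at least two levels")
    return swing / (levels - 1)


SWING = 1.0  # normalized, hypothetical swing; not a real datasheet value

nrz_gap = level_spacing(SWING, 2)   # two levels: the full swing separates them
pam4_gap = level_spacing(SWING, 4)  # four levels: one third of the swing each
print(nrz_gap, pam4_gap)
```

With only a third of the spacing left between neighbouring levels, a voltage dip that NRZ would shrug off can already push a PAM4 symbol into the wrong level.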

To put it in terms everyone understands, the new way of signaling allows NVIDIA to literally do more with less. Increasing the number of bits transferred per cycle from 1 to 2 doubles the effective data rate at the same frequency, so moving the same amount of data takes roughly half the time. The latency of an individual memory access doesn't shrink, though, and only workloads that are actually limited by memory bandwidth benefit. A game that streams very large textures and is bottlenecked by memory bandwidth could, in the best case, run up to twice as fast from this change alone; most games will gain less.
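For those who prefer numbers, the arithmetic behind this can be sketched in a few lines of Python. The symbol rate and bus width below are hypothetical round numbers I picked for the example, not the specifications of any actual card, and the result only covers raw transfer time, not access latency.

```python
def bandwidth_gb_per_s(symbol_rate_gbaud, bits_per_symbol, bus_width_bits):
    """Peak bandwidth in GB/s: symbols per second per pin * bits per symbol * pins / 8."""
    return symbol_rate_gbaud * bits_per_symbol * bus_width_bits / 8


def transfer_time_ms(size_gb, bandwidth):
    """Time in milliseconds to stream size_gb at the given bandwidth (GB/s)."""
    return size_gb * 1000 / bandwidth


SYMBOL_RATE = 10  # hypothetical: 10 billion symbols per second per pin
BUS_WIDTH = 320   # hypothetical: 320 data pins

nrz_bw = bandwidth_gb_per_s(SYMBOL_RATE, 1, BUS_WIDTH)   # 1 bit per symbol
pam4_bw = bandwidth_gb_per_s(SYMBOL_RATE, 2, BUS_WIDTH)  # 2 bits per symbol

print(nrz_bw, pam4_bw)
print(transfer_time_ms(1, nrz_bw), transfer_time_ms(1, pam4_bw))
```

At the same symbol rate and bus width, the PAM4 figure comes out at exactly twice the NRZ one, and streaming the same 1 GB takes half as long. Whether a game sees any of that depends on how much of its frame time is actually spent waiting on memory.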

My thoughts on the RTX 30xx Series

Raytracing has been really fun to play with, and even my 2080 Ti manages around 20-21 fps at 2560x1440 with all effects turned on. So I'm really excited to see a GPU that can do twice that and push raytraced games to an actually playable frame rate without needing DLSS - DLSS is nice and all, but it does have very obvious issues, even in DLSS 2.0.

I'll be waiting for AIB partners to come up with their own designs, and then see real world performance from reviewers such as Linus Tech Tips, Gamers Nexus and similar. So until I see any of that, I'm sticking with my tuned RTX 2080 Ti.